What is High Performance Computing?

High performance computing (HPC) is the ability to process data and perform complex calculations at very high speeds. With the 1STACK cloud-native HPC platform, you get secure, multi-tenant, bare-metal performance for high-throughput applications such as AI, machine learning, and data analytics.

Maximum Performance of Compute Resources

The 1STACK cloud-native HPC platform, coupled with disaggregated NVMe/TCP storage and GPU pools, delivers bare-metal performance while natively supporting multi-node tenant isolation.

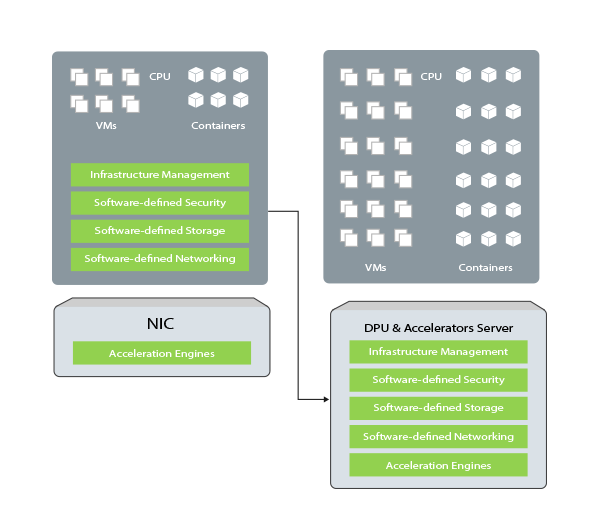

Infrastructure Service Offloads from CPU

Compute nodes equipped with a DPU (data processing unit) can offload networking, network virtualization, data encryption, and compression tasks from the CPU, freeing CPU cycles for application workloads and improving infrastructure efficiency.

Infrastructure as a Service, Composable to Meet Changing Application Loads

1STACK as an IaaS enables you to deliver high-value infrastructure and application services for even the most demanding HPC requirements.

1STACK with GPU and DPU to turbocharge your HPC

Maximum Throughput

Compute with maximum efficiency using thousands of cores, processing operations in parallel across multiple data sets.

Data-Centric Computation

Optimize data movement; offload networking, storage, and security workloads from the CPU.

Simplified Operations

Provide consistent access to apps and data; support more end users without increasing complexity.

Cost-Effective Growth

Start small and grow with predictable performance and costs.

Faster Deployments

Standardize the end-user environment, streamline deployments, and provide flexibility and scalability.

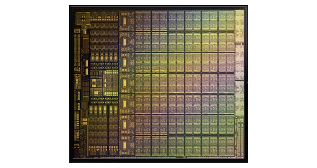

Boost Your Compute Power with GPU and DPU

GPU

- Complex parallel processing with teraFLOPS-scale performance

- Low-latency, high-bandwidth direct memory access

- Suitable for graphics rendering, AI/ML and workloads with large data sets

DPU

- An enhanced Ethernet adapter with an on-board packet-processing engine, ARM cores, and high-performance memory

- Offloads infrastructure tasks from the CPU, such as TCP networking, encryption, compression/decompression, and erasure coding

- Dedicates the CPU to application workloads

- Increases server performance
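Erasure coding, one of the offloaded tasks listed above, shows the kind of per-byte work involved. The following is a minimal sketch, assuming the simplest possible scheme (a single XOR parity block, as used in RAID 5) rather than the more general codes that production storage typically uses. The function names are hypothetical and are not part of any 1STACK or DPU API.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def encode(data_blocks):
    """Return the data blocks plus one parity block (tolerates one lost block)."""
    return list(data_blocks) + [xor_blocks(data_blocks)]


def recover(stripe, lost_index):
    """Rebuild the block at lost_index by XOR-ing all surviving blocks."""
    survivors = [b for i, b in enumerate(stripe) if i != lost_index]
    return xor_blocks(survivors)


stripe = encode([b"AAAA", b"BBBB", b"CCCC"])
rebuilt = recover(stripe, lost_index=1)  # pretend block 1 was lost
```

Every byte of every block passes through the XOR step on both encode and recovery, which is why this work consumes host CPU cycles when it is not offloaded.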

When a DPU is installed in a high-performance server, it replaces the traditional NIC and can offload tasks unrelated to the application workload from the CPU.

Non-workload tasks, such as networking and storage features like encryption, compression/decompression, and erasure coding, can be highly CPU-intensive. A DPU, with its packet-processing engine, can perform such tasks faster and at lower cost than a CPU. Hence, a DPU-enabled server can run more application workloads, with faster input/output to the network. Your server works harder and faster as a result.
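To get a feel for the host CPU cost of one such task, the sketch below times software compression using Python's standard `zlib` module. It measures only what the host CPU spends; it does not measure a DPU, and the payload and the helper name `cpu_seconds_to_compress` are illustrative assumptions.

```python
import os
import time
import zlib


def cpu_seconds_to_compress(payload: bytes, level: int = 6):
    """Compress payload on the host CPU; return (compressed bytes, CPU seconds used)."""
    start = time.process_time()
    compressed = zlib.compress(payload, level)
    return compressed, time.process_time() - start


# Roughly 8 MiB of mixed content: half random, half repetitive (purely illustrative)
payload = os.urandom(4 * 1024 * 1024) + b"log line\n" * (4 * 1024 * 1024 // 9)
compressed, cpu_used = cpu_seconds_to_compress(payload)
print(f"{len(payload)} -> {len(compressed)} bytes, {cpu_used:.3f} CPU seconds")
```

Multiplying the measured CPU time by the volume of data a storage or network path handles per second gives a rough idea of how many host cores such a task can occupy when it runs in software.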

Why Choose a DPU Server?

Core features of DPU:

- High-speed networking connectivity

- High-speed packet processing with specific acceleration and programmable logic

- A CPU co-processor, ARM or MIPS

- High-speed memory

- Optimized for cryptography and storage offload

- Security and management features, with hardware root of trust

- On-board Linux OS, separate from host system

STARVIEW Brings DPU Technology to Your Data Center

Offload Networking

Secure connectivity can be offloaded to a DPU so that the server host still sees a NIC while the extra security layers are hidden from it. This makes sense when you do not want to expose the networking details of multi-tenant systems. You can also offload Open vSwitch (OVS), firewalls, or other applications that require high-speed packet processing.

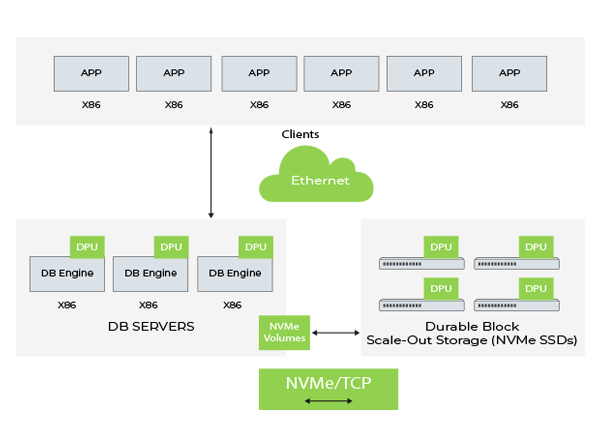

Disaggregated NVMe/TCP storage

With the DPU's PCIe root-complex capability, NVMe SSDs can be connected directly to the DPU and then exposed over the network fabric to other nodes in the data center.

A DPU in a host server can also present itself to the host as a standard NVMe device while managing an NVMe/TCP connection on the DPU, so the actual target storage can reside on other servers elsewhere in the data center.
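For orientation, this is roughly what attaching a remote NVMe/TCP target looks like from a Linux initiator using the standard `nvme-cli` tool. It is a sketch only: the source does not describe how 1STACK or the DPU establishes the connection, the helper name is hypothetical, and the address and NQN are placeholders. The function builds the command without running it.

```python
import shlex


def nvme_tcp_connect_command(target_addr: str, nqn: str, port: int = 4420) -> list[str]:
    """Build (but do not run) an nvme-cli command that connects to an NVMe/TCP target."""
    return [
        "nvme", "connect",
        "--transport=tcp",
        f"--traddr={target_addr}",
        f"--trsvcid={port}",  # 4420 is the conventional NVMe over Fabrics port
        f"--nqn={nqn}",
    ]


# Placeholder values, not taken from any real deployment
cmd = nvme_tcp_connect_command("192.0.2.10", "nqn.2014-08.org.example:storage-pool-1")
print(shlex.join(cmd))
```

Once connected, the remote namespace appears to the initiator as an ordinary NVMe block device, which is the property that lets remote storage be consumed like a local drive.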

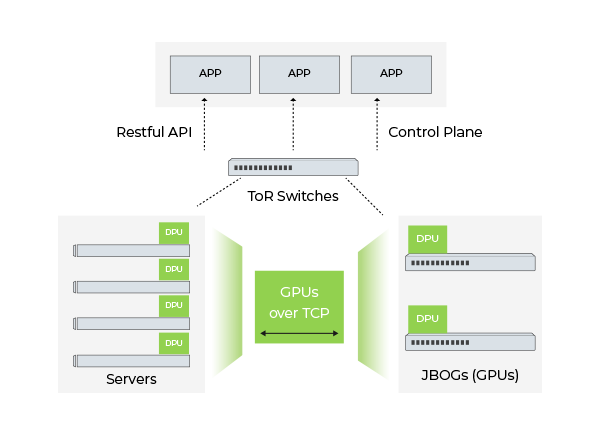

Remote GPUs

When a separate hypervisor runs on the PCIe device, x86 or GPU compute becomes another type of deployable resource. Compute instances can be built from resources at the DPU level and can span GPUs across multiple servers.

GPUs can also be attached directly to DPUs, exposing them to the network fabric.

Bare Metal as a Service

You can also provision an entire bare-metal server, with the DPU serving as the infrastructure provider's enclave and the remainder of the server treated as add-ons. High-performance bare-metal servers can be built flexibly as needed from resources at the DPU level, attaching storage and GPUs from disaggregated NVMe SSD and GPU pools while maintaining performance close to that of locally attached devices.

Customize your HPC platform with your choice of components (server, hypervisor, CPU, RAM, network, SSDs, HDDs) and vendors. With 1STACK, you can run your platform on its own or integrate it into your broader storage infrastructure.